Why complex, data demanding and difficult to estimate agent based models? Lessons from a decades long research program

Abstract

Questions are often raised about the economy-wide, long-term consequences of micro (policy) interventions in an economy. Answering such questions requires complex, high-dimensional, agent-based models that are difficult to estimate. This soon takes economists outside the range of mathematics they are comfortable with. Such was, however, the ambition of the Swedish micro firm to macroeconomic systems modelling project presented in this essay, initiated almost 45 years ago. The model is presented as a Reference model for theoretical and empirical studies, with the ambition of understanding the roles of the “birth, life and death” of business agents in the evolution of a self-coordinated market economy, without the help of a Walrasian central planner. Mathematical simulation is argued to be the effective tool for such theoretical and empirical analyses. Four problems have been chosen that require a model of the Reference type, and that the Reference model has been used to address: (1) exploring the interior structures of the model for surprise analytical outcomes not previously observed, or thought of; (2) quantifying the long-term costs and benefits of large, structure-changing micro-interventions in the economy; (3) studying long-term historical economic systems evolutions, and asking what could alternatively have happened had the historical evolution originated differently; and (4) generalising from case observations.

1. Introducing old problems in new ways

In the wake of the Keynesian revolution, large-scale macroeconometric modelling became big business in the economics profession. There were business cycle macro models, and demand-driven input-output sector models of the Keynesian & Leontief (K&L) type, the ultimate manifestation of which was perhaps the Brookings Model of the 1970s, managed by Nobel laureate Lawrence Klein. This model was eventually linked up with a collection of other, similarly structured national models into an enormous global model.

It did not take long, however, for critical comments to emerge, notably about the unclear microfoundations of such macro models, the wasteful neglect of existing microdata that came with aggregation, and in general their empirical credibility and meaningfulness. What could they really be used for?

The microsimulation approach pioneered by Orcutt (1957, 1960, 1980) was one response to this critique. Orcutt disapproved of the erroneous assumptions underlying macro modelling. Only under extreme and unworldly assumptions about “elemental decision-making units” would macro relationships be theoretically acceptable, and those aggregates meant “a disastrous loss of accuracy of representation”. Klevmarken (1978, 1983) also regretted the unnecessary loss of available microinformation that came with aggregation for macro modelling, a loss that simulation experiments on the Swedish agent-based macroeconomic model, referred to as the “Reference model” in what follows (Section 2), had already at that time shown to be avoidable. This critique was reiterated at the 1977 Stockholm microsimulation conference, perhaps the first ever, where Bergmann, Eliasson, and Orcutt (1980) stated “that a criterion of good theory in economics can only be how well-grounded in relevant, empirical facts it is” (op. cit. p. 12); and the obvious fact is that economic agents such as individuals, households and business firms differ, and that good modelling should recognise the role of this heterogeneity. On this point, Citro & Hanushek (1997) argued that sufficient knowledge of micro unit behaviour (in their case, households) was not yet available for accurate specification of Micro-based Macro systems models. Research should, therefore, focus on obtaining better data and estimation procedures, and less on modelling based on assumptions.

Empirically well-grounded economic systems models featuring heterogeneous agents competing in dynamic markets, and growth through endogenous entry and exit (“selection”), soon run up against difficult problems in achieving statistically controlled parameter estimates. The modeller therefore often chooses to simplify the model, and instead faces the perhaps even more serious problem of mis-specification. With sufficient data and enormous future computer capacity, however, the estimation problems of complex, selection-based and non-linear models should in principle be solvable. A richly parameterised model would therefore be the right reference for empirically credible and open-minded theorising (see Section 5 on Surprise Economics). There would then be no need for macro models at all, since the process of aggregating behaviour in markets would be endogenised within the micro-based model, and aggregation would be reduced to summing up the outputs at the end. There would even be reason to expect that most macroeconomic relationships would not be compatible with the inherent relationships of an empirically well-grounded micro-based economic systems model (see Section 3). However, empirical credibility may still be out of reach today, implying a need to state objectively how to make empirical sense of less than perfectly calibrated complex models (Eliasson, 2016, 2017a; Hansen & Heckman, 1996).
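Why aggregation by summing micro outputs need not agree with a macro relationship built on averages can be shown with a deliberately tiny sketch. The square-root production function and the three firms below are invented for illustration and are not taken from the Reference model; the sketch only shows that applying a micro function to one “average firm” generally gives a different answer than summing the outputs of heterogeneous firms.

```python
import math


# Hypothetical firm-level production function (not the Reference model's):
# output = productivity * sqrt(labour), i.e. concave in labour.
def firm_output(productivity: float, labour: float) -> float:
    return productivity * math.sqrt(labour)


def summed_output(firms: list[tuple[float, float]]) -> float:
    """Micro-based aggregation: compute each firm's output, then sum."""
    return sum(firm_output(a, lab) for a, lab in firms)


def representative_output(firms: list[tuple[float, float]]) -> float:
    """Macro shortcut: one 'average' firm, scaled up by the number of firms."""
    n = len(firms)
    mean_a = sum(a for a, _ in firms) / n
    mean_lab = sum(lab for _, lab in firms) / n
    return n * firm_output(mean_a, mean_lab)


# Three heterogeneous firms as (productivity, labour); invented numbers.
firms = [(1.0, 1.0), (2.0, 16.0), (0.5, 100.0)]
print(summed_output(firms))                    # 14.0
print(round(representative_output(firms), 2))  # 21.86
```

With these invented numbers the representative-firm shortcut overstates the summed output; with a different pattern of heterogeneity, for example `[(2.0, 100.0), (0.5, 1.0)]`, it understates it, so the size and even the sign of the discrepancy depend on the micro structure.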

The Citro & Hanushek (1997) observation about the lack of knowledge of household behaviour applied even more to the state of knowledge about business firm behaviour. While the birth, life and death of individuals concern well-defined entities, the life of a business firm is one of constant structural transformation through mergers, acquisitions and divestitures. Indeed, demand for a better understanding of the nature and roles played by business firms in long-term economic development was the chief reason for initiating the project that led up to the Reference model of this essay (Section 2). Likewise, questions asked early on about the economy-wide, long-term consequences of micro-interventions in the economy prompted the quantitative inquiry into the Swedish industrial support program of the 1970s and 1980s, which was based on the Reference model (Section 6). In a historical perspective, seemingly minor occurrences in the distant past, like a clustering of successful entrepreneurial activities, or the establishment of a new institution that improved the allocative performance of markets, may have nudged the entire economic system onto a different historical trajectory than it would otherwise have taken (Section 7). The Reference model described in the next section (Section 2) has been found appropriate for quantitatively studying the economic systems consequences of such very long-term sequences of irreversible events.
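The claim that a seemingly minor occurrence can nudge a fully deterministic system onto a different trajectory can be illustrated with a standard textbook example of sensitive dependence on initial conditions. The sketch below uses the logistic map at r = 4, which is not part of the Reference model; it only shows how two deterministic paths that start 1e-10 apart end up far from each other.

```python
def logistic_path(x0: float, r: float = 4.0, steps: int = 50) -> list[float]:
    """Iterate the logistic map x_{t+1} = r * x_t * (1 - x_t) starting from x0."""
    path = [x0]
    for _ in range(steps):
        x = path[-1]
        path.append(r * x * (1.0 - x))
    return path


baseline = logistic_path(0.2)
nudged = logistic_path(0.2 + 1e-10)  # a tiny, 'seemingly minor' difference
gaps = [abs(a - b) for a, b in zip(baseline, nudged)]

print(f"initial gap: {gaps[0]:.1e}")
print(f"largest gap over 50 steps: {max(gaps):.2f}")
```

Both paths are exactly reproducible, yet the initial difference is amplified until the two trajectories bear no resemblance to each other, which is the sense in which history can depend on small events without any randomness.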

During the more than 40-year course of the project leading up to the Reference model, we have studied how heterogeneous and quite ignorant agents (firms) compete in more or less open markets, thereby dynamically coordinating the evolution of the model economy along growth trajectories whose success depends significantly on how agents are born (entrepreneurial entry) and die (exit) along the way, forming more or less successful business clusters. These growth dynamics involve integrating familiar, often trivially true principles of economics. I conclude below that understanding such economic systems dynamics is not possible without understanding this market-based integration, and that mathematical simulation is the appropriate analytical tool for doing so. Even though simulation has been the subject of a large philosophical literature as computers have become increasingly capable (Fontana, 2006), for me mathematical simulation is a branch of mathematics that is particularly suitable for addressing complex theoretical and empirical economic systems problems.

With this paper I, therefore, start afresh from the ambitions voiced at the 1977 conference, which I was myself involved in formulating: (1) one theoretical, emphasising the need for empirically well-grounded prior theory, and (2) another concerned with the unavoidably “false” assumptions underlying macro theory (Section 3). I will ask: What kind of theoretical foundation should a relevant micro-based economic systems model stand on? How should the aggregation of micro behaviour over markets be made a natural part of total economic systems dynamics? How intellectually restrictive is the economist’s standard mathematical toolbox, the calculus of optimisation? What analytical potential does simulation mathematics offer for enhancing the understanding of economic behaviour? How can existing, often diverse and taxonomically incompatible microdata be combined for maximum information access? Perhaps most important of all: what is gained from those complex, analytically non-transparent and difficult-to-estimate micro-based economic systems models when there are so many analytically transparent and familiar partial models whose parameters can be readily estimated?

In the choice between well-grounded and easy-to-estimate models, complexity often becomes an accepted excuse for simplifying the model into a “false” partial, static or linear format, resulting in an unclear mixture of inaccurate specification, badly defined behavioural parameters, and doubtful empirical credibility of what the analysis has to say.

What kind of trade-off is there between perfectly estimating the wrong model, and imperfectly estimating (“calibrating”) a Micro-based Macro economic systems model that rests on empirically well-grounded prior assumptions?

One example: worries about technological mass unemployment, or “The March of the Machines”, have currently returned to the economic debate. This time artificial intelligence (AI) is the cause of worry (The Economist, 2016). Some 40 years ago, however, mass unemployment caused by the then-new microelectronics technologies worried even famous economists (Leontief, 1982). Ever since workers destroyed textile machines during the early industrial revolution, technological unemployment has returned for debate every half-century or so. Since “job destruction” and “new job creation” usually occur in different industries and places, and with a time lag relative to the firms laying off workers because of technological advance, narrowly defined partial and static sector or macro models have little to say on this issue. The first serious use of the Reference model occurred when the Swedish Government Committee on Computers and Electronics (DEK) asked the Industrial Institute for Economic and Social Research (IUI, now IFN), of which I had just become the director, to calculate the economy-wide, long-term employment consequences of introducing new microelectronic technologies under different labour market regimes (Eliasson, 1979). The conclusion was that technologically laid-off labour would soon be employed elsewhere, and that by far the most socially costly policy (in terms of lost output) was to prevent the introduction of new technologies and/or delay the immediate unemployment effects caused by them.

I will proceed in the next section to introduce the Reference model, a complex, evolutionary economic systems model on which the economy-wide, long-term consequences of the endogenous “birth, life and death of business agents” can be studied. I will then choose four case illustrations, among many possible, whose understanding requires such a model as a minimum, and to whose study the Reference model has been applied. I address them in order of what I regard as their importance and the opportunities they represent for agent-based economic modelling: (1) to evaluate the empirical credibility of “surprise outcomes” of simulations, not yet observed or not yet thought of, because they are not subsumed within traditional, more narrowly defined partial, static, linear and/or macro models; (2) to quantify the long-term costs and benefits of large, structure-changing micro-interventions in the economy; (3) to study the micro origins of long-term historical evolutions of an economic system, that is, to address the question: what would have happened under alternative initial circumstances?; and (4) to support generalising from case study observations.

I consider the first, theoretical application especially intriguing, the upshot being that good economic theory and good models, besides being empirically credible, should be capable of generating surprise analytical outcomes. From the beginning in 1974, the Reference model also had a dominant theoretical orientation with a strong but novel basis in traditional economic principles. The focus was on the complex economic systems consequences of integrating those principles, which were studied numerically using simulation mathematics. The unique dynamic properties of the Reference model originate in the integration of Schumpeterian entrepreneurial economics with the sequential feedback dynamics of the Wicksellian/Stockholm School, which emphasises the difference between ex ante plans and ex post outcomes, and with Keynesian demand feedback of all income (wages and profits) conveyed to the household sector and of taxes collected by the public sector, plus a touch of Kaldorian technical change. In fact, I will argue in Section 2 that the integration of those principles in the three markets of the model is what makes it an evolutionary model.

I will also conclude that the use of mathematical simulation to study complex, economy-wide, dynamic agent-based economic systems theoretically and empirically is a neglected field in mainstream economics that deserves much more attention there.

2. The reference model economy

The Reference model has been extensively documented in publications over the years. Since recent presentations can be easily accessed, details need not be repeated here. I give a brief overview, and background on some specifics related to the four applications chosen. There are also some pertinent links to the literature of economic doctrines. Since many people have been involved in the modelling project over its nearly 45-year lifespan, the reader will have to excuse me for being somewhat personal in places.

2.1 Origin and background

The origin of the Reference model dates back to late 1974, when I was approached by Thomas Lindberg, then a director at IBM Sweden, who wondered why so little attention was being paid to large-scale economic modelling in Sweden and asked me to consider setting up such a model for the Swedish economy. I was at the time involved in a study of economic planning and budgeting in large western companies (published as Eliasson, 1976a) and had a pretty good idea of how to structure a model of firm decision making. My condition for engaging in such an academic modelling venture was that the model be micro firm based, explicitly represent aggregation in markets up to the macro level, and be capable of portraying the dynamics of an evolving capitalist market economy. IBM took me around the academic world to check on what was going on in the field, with visits notably reaching out from IBM’s own research centres with a social science orientation in San Jose (USA), Pisa (Italy), and Peterlee (England). One desideratum was that the model venture should not replicate something already achieved. There was general agreement that this was clearly a novel approach to take. The study trips confirmed that, except for Barbara Bergmann’s Transactions Model of the US economy, there was no such project in the research pipeline at the time. I am particularly grateful to Kenneth Arrow, who helped me get in contact with unorthodox economists in the US. Those contacts led me to Barbara Bergmann and Guy Orcutt in particular, but also to several heretical theoretical economists, among them Richard Day, Harvey Leibenstein, Herbert Simon and Sidney Winter. Winter (1964) was especially supportive of my ambition to begin building an economy-wide systems model from a micro firm platform, and with a Darwinian orientation.

The deal with IBM was unlimited programming and computer support for two years. The IBM view was that computer simulation would eventually, if not soon, become the dominant mathematical tool in economics, as it was already becoming in the natural sciences and engineering. I was then the head of the Economic Policy Department and the Chief Economist of the Federation of Swedish Industries, which meant that I had a budget for statistical studies, mostly to support economic forecasting. Even though we soon realised that business cycle forecasting would not be an interesting application of this model, the business community was very positive, because the model, contrary to the then all-pervasive macroeconomic models of academia and government, promised to give entrepreneurs and businesses an explicit role in industrial and economic analysis.

The sponsors preferred that the model be empirically based. I also realised from the beginning that it would not be possible to secure academic funding for such a project in Sweden, so this was an opportunity not to be missed. The project so conceived was of course a reckless venture. I secured an OK from the then President of the Federation of Swedish Industries, Axel Iveroth, to proceed, and to integrate the modelling project with the business forecasting activities of my department. The University of Uppsala, and my former professor Ragnar Bentzel, became the third party in the modelling venture.

Thanks to IBM’s very effective research management practice of assigning at least one highly educated staff member to the task of being “actively interested in the project”, the IBM part of the project was successfully concluded within the two-year period.1 I was especially intrigued by the ease with which I could discuss the empirical relevance and technical problems of the model with IBM staff who had engineering degrees. While the endogenous growth-generating micro core of the model, as specified in Eliasson (1976b), has needed few modifications, the modular design of the entire model has allowed a naturally integrated, extended superstructure to be built around it (see Figure 1), the monetary system being one example, later extended with an integrated stock and financial derivatives market, and a rudimentary venture capital market, as a first step towards modelling a complete innovation commercialising process, a competence bloc (Ballot, Eliasson, & Taymaz, 2006; Taymaz, 1999, and see Section 5.1).

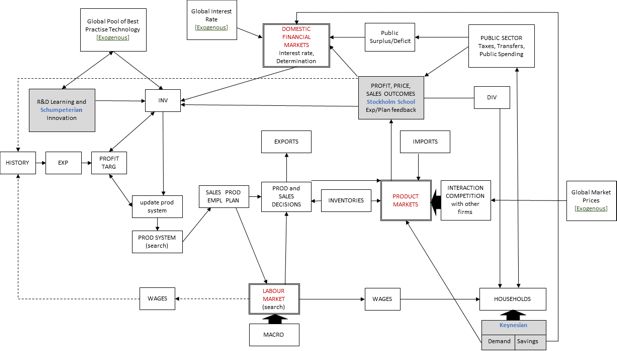

Figure 1. Business Decision System – one firm’s quarterly expectation, planning, decision and outcome feedback cycle.

Note: The figure shows the internal expectational, decision and planning sequences, and the experience feedbacks, of one firm model, its interfaces with external product, labour and financial markets, and exogenous technology and global price inputs. Micro firm specification covers only four manufacturing markets. The rest of production is represented by six input-output sectors, all linked together by a constantly updated input-output delivery matrix (not shown). The eleventh sector, the public sector, is shown in the figure.

Source: Updated version of figure in Eliasson (1977).

DIV: dividends.

EXP: expectations.

INV: investments.

PROFIT TARG: Profit target according to MIP targeting formula.

The Reference model described in this article is constructed from the bottom up on the basis of empirical evidence on the behaviour of firms collected by myself and documented in Eliasson (1976a), which provided not only the specification of a firm model (op. cit. Chapter XI) but also the taxonomy of the consistent Micro-to-Macro database needed (see Albrecht et al., 1992). To feed the Reference model with a high-quality microdata foundation, my department at the Federation launched what we called the “Planning Survey”, sent to all large Swedish manufacturing firms and some smaller ones, together covering more than 80 percent of Swedish manufacturing output. Questions were formulated to be compatible both with the firm decision model and with the taxonomy of firms’ internal statistical systems, as I had learnt and documented in Eliasson (1976a). The Planning Survey also became very useful for the Federation, and the backbone of its economic forecasting activity.

Thanks to two very skilled IBM computer engineers, Mats Heiman and Gosta Olavi, working one after the other, the model was coded (in APL) and up and running on synthetic data already in 1975, and was loaded with real firm data from the Planning Survey of the Federation of Swedish Industries in 1976. The first data set, 1974–1976, was published in Virin (1976), and used to run the model for my 1977 article on the economy-wide consequences of regulations and competition processes in the labour market. When I became the Director of IUI (now IFN) in 1977, the resources of the research institute became available, and over the years several researchers at the institute have worked on and off with the Reference model and its database; among them I should mention Jim Albrecht, Ken Hanson, Thomas Lindberg and, most importantly, Gerard Ballot, Bo Carlsson and Erol Taymaz. The model was soon ready and set up for experimental, “semi-empirical” applications, and continued work was largely supported by external funding, notably for Government committee projects. The Federation, however, continued to conduct the annual Planning Survey for its own business cycle forecasting activity.

IBM now appears to have largely abandoned its ambitions in the social science modelling field, and micro firm based economic systems modelling has taken a long time to catch on in academia (see Section 4). The Reference model has, however, survived thanks to a number of academic researchers who have improved upon its modular structure and used it for empirical inquiries. Gerard Ballot, Bo Carlsson, Dan Johansson and Erol Taymaz should be particularly mentioned.

Interestingly, since the IBM period the Reference model has not had a separate budget, but has been largely self-financed through government committee work and related data gathering projects. The model has found a number of useful applications: from a study for DEK, in a joint project between IVA and IUI financed by the Swedish Board of Technical Development (STU) (Carlsson, 1981; Carlsson, Dahmén, Grufman, Josefsson, & Örtengren, 1979), which also provided a critical dataset on global best-practice technology for the model, to analytical work for the DEK (Eliasson, 1979, 1980a, 1980b, 1981), to the ambitious cost-benefit study of the industrial support program presented in some detail below, and to several OECD studies (Carlsson, 1991; Eliasson, 1987, 1997). For several years, IUI had three economy-wide models up and running: the Reference model, a highly aggregated monetary model and a Keynes & Leontief sectoral model. Since the last two models could be derived as special cases of the Reference model by aggregating up to sector levels and shutting down all the Stockholm School ex ante plans, ex post outcomes dynamics, it was interesting, for the long-term analysis of the Swedish economy published in 1979 (IUI, 1979), to ask the same questions of all three models and then identify the reasons for the different answers.

The most important insight, which should not come as a surprise, was the sensitivity of long-term growth trajectories to the choice of model (read: its prior assumptions). While the long-term economic systems trajectories depended critically on the parameters governing market resource allocation in the Reference model, this was not the case for the other two, essentially equilibrium, models (IUI, 1979, pp. 52–60).2 Another insight concerned economic systems controllability. While the Reference model economy was difficult to control through the available policy parameters, simulating the other two models conveyed the illusion of being in full control of the economy. For instance, pushing the Reference model economy too fast and too far to lower unemployment soon began to disturb the market coordination of production and investment, and resulted in worse, countervailing labour market disturbances.

Even though IUI made a de-identified firm database for the year 1982 available for external academic use in 1992 (see Taymaz, 1992), there have not been many external academic users. Broström (2003), however, who set the model up for a master’s thesis at Linköping University studying the reliability of the Capital Asset Pricing Model (CAPM) under different economic systems conditions, demonstrated that the model was not that technically difficult to operate. Furthermore, the database work for the model, notably the Planning Survey and the complete stock and flow consistent micro-based National Accounts statistics compiled for one initial year, 1982 (Albrecht et al., 1992), has been found useful in other, unrelated research projects. Bergholm (1985) simulated the pull effects of the small number of very large and dominant engineering firms on the Swedish economy, and its exposure to possible negative market experiences of some of these firms. Dan Johansson used the model in his 2001 doctoral thesis at the Royal Institute of Technology (KTH), which was in part a study of the exposure of the Swedish economy to information and communications technologies for the Swedish Agency for Civilian Emergency Planning. In return, a new firm-based computer and communications (C&C) industry was introduced in the model (see Eliasson & Johansson, 1999; Johansson, 2001, 2005). Most important, however, is the capacity for theoretical reasoning offered by the Reference model. The discussion in Section 4 illustrates that complex, high-dimensional models of the Reference model type constantly come up with surprise phenomena: phenomena that have not been observed before but may occur suddenly, that cannot be derived from within confined neoclassical structures, or that suggest ideas about what may have happened that one can then go and look for.

My conceptualisation of an “Experimentally Organized Economy” dawned upon me during repeated simulation studies on the model and its foundation in Stockholm School ex ante plans, ex post outcomes analysis, which features the prevalence of more or less mistaken decisions at the micro level, and the general ignorance of economic actors about circumstances that may challenge their very economic existence (Eliasson, 1987, 2015). Since an eleven-sector computable general equilibrium (CGE) model and a Leontief & Keynesian (L&K) model can be demonstrated (see below) to be special cases of the Reference model, any user of the Reference model can easily relate to those more familiar models, and identify and evaluate the origin of unfamiliar surprise outcomes.

The Reference model is essentially deterministic, and features phases of deterministic economic systems chaos. We have tried to keep stochastic specifications to a minimum, but they were unavoidable in two important places: (1) the assignment of the priority order in which firms search each quarter for labour in the market, including poaching from other firms by offering higher wages, and (2) the assignment of productivity and other characteristics to new firms entering markets, as drawings from empirically studied distributions of such firms.
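The first stochastic element, a random priority order for firms’ quarterly labour search with poaching, might be sketched as follows. Everything here is a hypothetical simplification chosen for illustration: the `Firm` fields, the one-worker poaching step and the 5 percent wage premium (`raise_pct`) are assumptions, not the Reference model’s actual labour market specification. A seeded random generator keeps runs reproducible, so the simulation remains deterministic for a given seed.

```python
import random
from dataclasses import dataclass


@dataclass
class Firm:
    name: str
    wage: float
    workers: int
    wanted: int  # planned (ex ante) labour demand this quarter


def labour_search(firms: list[Firm], unemployed: int, rng: random.Random,
                  raise_pct: float = 0.05) -> int:
    """One quarter of hypothetical labour search; returns remaining unemployed.

    Firms search in a random priority order. Each firm first hires from the
    unemployed pool; if its demand is still unmet, it poaches one worker from
    a randomly chosen rival by offering more than the rival pays.
    """
    order = firms[:]
    rng.shuffle(order)  # the stochastic element: who gets to search first
    for firm in order:
        shortfall = firm.wanted - firm.workers
        if shortfall <= 0:
            continue
        hired = min(shortfall, unemployed)
        firm.workers += hired
        unemployed -= hired
        if firm.workers < firm.wanted:
            rivals = [f for f in firms if f is not firm and f.workers > 0]
            if rivals:
                rival = rng.choice(rivals)
                # The poacher pays at least raise_pct above the rival's wage,
                # which may raise its own wage level.
                firm.wage = max(firm.wage, rival.wage * (1.0 + raise_pct))
                rival.workers -= 1
                firm.workers += 1
    return unemployed


rng = random.Random(42)
firms = [Firm("A", 100.0, 10, 14), Firm("B", 95.0, 8, 8), Firm("C", 90.0, 5, 9)]
left = labour_search(firms, unemployed=3, rng=rng)
print(left, [(f.name, f.workers, round(f.wage, 2)) for f in firms])
```

Changing the seed changes who searches first and therefore who gets the scarce unemployed workers and who is poached, while total labour (employed plus unemployed) is conserved within the quarter.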

The Reference model began life nearly 45 years ago as a bottom-up open economy construct, with locally interacting (competing) heterogeneous firms, in principle across the entire economy and over time: a complex mathematical structure beyond traditional analytical tractability. Richiardi (2017) distinguishes between such models and simpler, partial simulation models, often related to some external equilibrium skeleton that makes them analytically tractable. This essay can be seen as an argument against the latter, and for the need for the former to address a number of urgent socio-economic problems credibly, for instance the four examples in this essay. I will also make the point that simulation is a highly sophisticated mathematical tool that today makes it unnecessary to hold on to wrongly specified models in order to use the economist’s old toolbox. The Eurace@Unibi model appears to be of the new, complex agent-based kind that I advocate (see for instance Dawid, Harting, Van der Hoog, & Neugart, 2016), and it also incorporates many features of the original Reference model.

The main reasons for the limited external academic interest in models of this type so far are (1) their complexity, their need for new methodological skills, and their demands for resources, which for a long time put such modelling beyond the budgets of academic computing; (2) their demands on the availability of unique statistical data; and, most importantly (and unfortunately), (3) their not being structurally and methodologically of the kind familiar to the profession. This has also made both replication of studies and compact article writing on the results difficult, even though a version of the model based on a de-identified database has been available since the early 1990s for anyone wanting to replicate the experiments.3 Early support by IBM, the Federation of Swedish Industries, and IUI made it possible to overcome most of those difficulties. However, as I will argue extensively in the main text, and have argued in other publications, this is the way good empirical projects in economics should be organised and will have to be conducted in the future, and I would not do it differently, should I do it again.

2.2 Theoretical foundation

The Reference model, often referred to as the MOSES model (for MOdel of the Swedish Economic System), has been extensively documented. For technical specifications, other details and further references, see Eliasson (2017b). The following brief presentation adds some theoretical aspects in direct support of the main text of this article. I do this by demonstrating how the particular properties of the model exploited in the four applications derive directly from the integration of (1) Schumpeterian/Austrian economics, focusing on the role of the entrepreneur, with (2) Wicksellian/Stockholm School economics, focusing on the feedback loops between ex ante plans and ex post outcomes at the micro market level.4 All micro production and income generation is then aggregated over product, labour and financial markets with complete and consistent (3) Keynesian demand feedbacks. On top of this, firms tap into an exogenous global pool of best-practice technology through their investment decisions. I have called this the (4) Kaldorian connection, because the original specification had technology specifications similar to those in recent “Kaldorian” agent-based models.

The Schumpeter-Wicksell connection defined the basic dynamic element of the Reference model from the beginning, and drew directly on my interview study of business planning practices (Eliasson, 1976a, 1992, 2005a). Endogenous sequential Keynesian demand feedback became a necessary element in a firm-based, economy-wide model. The tension between ex ante plans and ex post outcomes, as emphasised by the Stockholm School, added new, innovative dynamics to the traditional demand feedback, in that firms were confronted each period with their expectation errors, leading to production and investment planning “mistakes”.5
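The quarterly confrontation of ex ante plans with ex post outcomes can be sketched for a single firm. The adaptive expectation rule, the weight `alpha`, the exogenous demand path and the inventory buffer are illustrative assumptions, not the Reference model’s expectation functions; the point is only that each quarter’s expectation error feeds into the next quarter’s plan.

```python
def simulate_quarters(demand: list[float], alpha: float = 0.5,
                      initial_expectation: float = 100.0) -> list[tuple[float, float, float, float]]:
    """Hypothetical ex ante / ex post feedback loop for one firm.

    Returns one (expected, actual, error, inventory) tuple per quarter.
    """
    expected = initial_expectation
    inventory = 0.0
    records = []
    for actual in demand:
        planned_output = expected                        # ex ante plan
        sales = min(actual, planned_output + inventory)  # cannot sell more than is available
        inventory += planned_output - sales              # ex post: unsold goods pile up
        error = actual - expected                        # expectation error ("mistake")
        records.append((expected, actual, error, inventory))
        expected += alpha * error                        # revise next quarter's expectation
    return records


for row in simulate_quarters([100.0, 120.0, 120.0, 120.0]):
    print(row)
```

After the demand jump, the expectation errors in this sketch shrink from 20 to 10 to 5 as the firm revises its plans, while a demand fall instead leaves unsold output in inventory that carries over into the next quarter.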

Referring to the early technology upgrading of individual firm capacities as a “Kaldorian” feature may be stretching the concept a bit. However, technology and productivity advance inspired by Kaldor (1957) has become a characteristic of a class of agent-based models with features similar to those of the original version of the firm model of the Reference model (see, inter alia, Lorentz & Savona, 2007, and several chapters in Cantner, Gaffard, & Nesta, 2009). For symmetry I therefore use that nomenclature.6

The Reference model has been implemented on firm data for four Swedish manufacturing industries/markets and placed in a consistent micro firm National Accounts L&K framework of the entire Swedish economy (Albrecht et al., 1992). My focus here will however be on the theoretical foundation of the model. Even though the model generates a large number of “stylised facts”, that capacity alone is not sufficient for empirical credibility. For calibration and empirical credibility, there is a long story of its own, see Eliasson (2016, 2017a).

2.2.1 Entrepreneurial competition, firm dynamics and endogenous growth through selection — the Schumpeterian/Austrian connection

The business firm is the source of evolutionary selection and long-term growth in the Reference model; in principle, it is still modelled as in the first published version of the model code in Eliasson (1976b). Each firm is exposed to competition from all other firms in product markets, and for resources in labour and financial markets. It has to respond by investing, improving its productivity, recruiting or laying off labour, and modifying its scale of operations, and, from Ballot & Taymaz (1998) onwards, also through endogenous learning and R&D investments in incremental or radical innovations, all to maintain its targeted profitability, or, if it fails, eventually to exit. In that process the product price, the interest rate, the wage level, and the employment of and quantity supplied by individual firms are determined. The Ballot & Taymaz (1998, 1999) R&D based learning and innovation module is based on genetic algorithms of the kind now used in the exploding field of AI applications. With exit endogenous from the beginning, and entry endogenous since Hanson (1989) and Taymaz (1991a), the population of firms is also endogenous. The (management of the) firm does all this by exercising a Maintain or Improve Profits (MIP) principle (Eliasson, 1976a, p. 236, 2017b), which involves climbing ex ante perceived profit hills. These hills, however, change endogenously through all the profit hill climbing going on in the economy, forcing revisions of plans and of the ex ante conceptualised profit hills from quarter to quarter. In Eliasson (1976a, chapter XI) a model of firm behaviour based on the MIP principle was presented, a modified version of which has been worked into the Reference firm model.
Implementing the MIP principle can be shown to correspond to ex ante profit maximising search behaviour of the individual firm, which can however never be successfully achieved ex post because of the ongoing competition, structural reorganisation and relative price change that constantly force change in firms’ perceptions of the future, and mistaken production planning, pricing and investment decisions.
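The MIP search described above can be illustrated with a deliberately tiny, hypothetical sketch. It assumes a single firm choosing output on a perceived linear demand curve with constant unit cost, and a true demand intercept that shifts from quarter to quarter as a stand-in for the profit hill climbing of all other firms. None of these functional forms, nor the names `profit`, `mip_step` or `simulate`, come from the Reference model code. The firm only moves if a neighbouring output level promises strictly higher ex ante profit (maintain, or improve), then compares planned with realised profit and revises its perception of the hill.

```python
# Hypothetical sketch of Maintain or Improve Profits (MIP) search.
# Assumed (not from the Reference model): linear demand p = a - b*q,
# constant unit cost c, and a true demand intercept that shifts each
# quarter because rival firms are climbing their own profit hills.


def profit(q, a, b, c):
    """Profit of output q on the demand curve p = a - b*q with unit cost c."""
    return (a - b * q - c) * q


def mip_step(q, a_perceived, b, c, step=1.0):
    """Keep q unless a neighbouring output promises higher ex ante profit."""
    # Listing q first means ties keep current output: maintain, or improve.
    candidates = [x for x in (q, q - step, q + step) if x >= 0]
    return max(candidates, key=lambda x: profit(x, a_perceived, b, c))


def simulate(quarters, a_path, b=1.0, c=2.0, q0=1.0, learn=0.5):
    """Return (output, planned profit, realised profit) for each quarter.

    a_path holds the true demand intercept per quarter; the firm's perceived
    intercept a_hat adjusts towards the observed one at speed `learn`.
    """
    q, a_hat = q0, a_path[0]
    history = []
    for t in range(quarters):
        q = mip_step(q, a_hat, b, c)            # climb the perceived hill
        planned = profit(q, a_hat, b, c)        # ex ante plan
        realised = profit(q, a_path[t], b, c)   # ex post outcome
        history.append((q, planned, realised))
        a_hat += learn * (a_path[t] - a_hat)    # revise the perceived hill
    return history
```

With unchanged demand, `simulate(10, [10.0] * 10)` settles on the top of the hill (output 4) and every plan is realised; with `[10.0] * 5 + [6.0] * 5`, the plan made on the old hill in quarter 5 promises a profit of 16 but realises 0, an ex post "business mistake" before perceptions catch up.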

Heterogeneity among firms is introduced when the model economy is loaded with real firm data from the Planning Survey to define the initial state for simulation experiments. Heterogeneity is maintained through investments, R&D and new technology innovations in individual firms, and through innovative entry. Heterogeneous firms, living under the rules of Schumpeterian creative destruction (Table 1), lead a precarious life in the model. They constantly have to respond to actual and imagined challenges from incumbent firms or potential entrants, realistically expecting at least some of them to be superior performers. The individual firm simply has to innovate successfully, or its very existence is in peril, since it may eventually be forced to exit. There is no equilibrium resting place for a firm. Thus model economy growth is pushed by endogenously enforced entrepreneurial competition based on a rationally perceived challenge that both maintains the heterogeneity of the firm population and propels a Schumpeterian creative destruction growth process, driven by competition and based on selection among firms (firm turnover), as stylised in Table 1; see Eliasson (1996, 2009a, 2017b).

Table 1. Growth through Schumpeterian creative destruction and selection.

| Stage | Process |
|---|---|
| 1. | Innovative entry enforces (through competition) |
| 2. | Reorganisation |
| 3. | Rationalisation, or |
| 4. | Exit (shut down and business death) |

Source: Eliasson (1996, p. 45).
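One round of the Table 1 sequence can be sketched in a stylised, hypothetical way, with each firm represented only by a productivity number. The catch-up rate `catch_up` and the exit threshold `exit_ratio` are illustrative assumptions, not the entry and exit rules of Hanson (1989) or Taymaz (1991a).

```python
def selection_round(firms, entrant, catch_up=0.05, exit_ratio=0.6):
    """One stylised round of entry, reorganisation/rationalisation and exit.

    firms: productivities of incumbent firms; entrant: productivity of a new
    firm. Both parameters are hypothetical, chosen only for illustration.
    """
    # 1. Innovative entry: the entrant joins the population, and the best
    #    performer (often the innovative entrant) sets the competitive bar.
    population = list(firms) + [entrant]
    best = max(population)
    survivors = []
    for productivity in population:
        if productivity < exit_ratio * best:
            continue  # 4. Exit: too far behind the competitive standard
        if productivity < best:
            # 2-3. Reorganisation/rationalisation: laggards close part of the gap
            productivity += catch_up * (best - productivity)
        survivors.append(productivity)
    return survivors
```

For example, `selection_round([1.0, 0.5, 0.8], entrant=1.2)` forces the firm at 0.5 to exit, while the firms at 1.0 and 0.8 rationalise to about 1.01 and 0.82.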

2.2.2 Ex ante plans, ex post outcomes — the Wicksellian/Stockholm School connection

According to Ohlin (1937), the origin of Stockholm School economics is to be found in Wicksell’s (1898) embryo of an aggregate analysis of production, Myrdal’s (1927) pioneering thesis on the role of expectations, and the period analysis of Lindahl (1930, 1939). In the Reference model economy, the expectations, ex ante plans and ex post outcomes of individual firms constantly differ, and these experiences are fed back period by period. The dynamic market coordination of the Reference model economy is regulated by a dozen time reaction parameters. Experience from mistaken expectations, and from failure to realise plans, affects the next period’s plans of firms, and so on, and critically influences the dynamics of the entire model economy. These time reaction parameters are also the most difficult to calibrate. Measurement and calibration errors may give rise to turbulence and cumulative economic systems behaviour that has no economic foundation.
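The role of a time reaction parameter can be shown in a one-market toy model. The sketch below is hypothetical: it has a single parameter `lam` (the Reference model has about a dozen), a linear demand curve, and output planned in proportion to the expected price. Only the feedback structure (ex ante plan, ex post outcome, revised plan) is taken from the text.

```python
def expectation_path(lam, quarters=40, A=10.0, B=1.0, s=1.0, pe0=2.0):
    """Ex post planning errors when price expectations adapt at speed lam.

    Hypothetical one-market sketch: the firm plans output s * pe from its
    ex ante price expectation pe, the market price is A - B * output, and
    the ex ante/ex post gap is fed back into next quarter's expectation.
    """
    pe, errors = pe0, []
    for _ in range(quarters):
        planned_output = s * pe          # ex ante plan
        price = A - B * planned_output   # ex post market outcome
        errors.append(price - pe)        # expectational error
        pe += lam * (price - pe)         # time reaction parameter at work
    return errors
```

In this toy model the error is multiplied by 1 - lam * (1 + B * s) every quarter. With B = s = 1, a value of 0.3 damps errors smoothly, 0.9 makes them oscillate while dying out, and 1.1 makes them explode: a small miscalibration of one reaction parameter is enough to turn orderly adjustment into turbulence with no economic foundation.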

The time reaction parameters are the source of endogenous growth. They regulate the longer term reversals of the model economy that sometimes follow a long and rapid expansion phase, when the economy has been operating close to capacity limits and its stability is sensitive to even small disturbances (Eliasson, 1984). Because of its non-linearity and initial state dependency, the Reference model now and then enters phases of chaotic economic systems behaviour that may be difficult to distinguish from stochastic behaviour (Carleson, 1991). The flows of sequential feedback generated within this deterministic and highly non-linear selection-based economic system are therefore not stochastic, even though they may appear to be. I have been firmly against imposing on the model a stochastic feedback flow of differences between ex ante plans and ex post outcomes, a suggestion that has frequently surfaced in academic discussions as a way to make the model more amenable to formal equilibrium analysis.
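That deterministic non-linear feedback can look stochastic is a general property of chaotic systems. The logistic map below is a textbook example and is not part of the Reference model: it is fully deterministic, yet two orbits starting 1e-10 apart soon become unrelated, which is why such output can be hard to tell apart from noise.

```python
def logistic_orbit(x0, r=4.0, n=50):
    """Iterate the deterministic logistic map x -> r * x * (1 - x)."""
    xs = [x0]
    for _ in range(n):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs
```

Rerunning `logistic_orbit(0.2)` always reproduces the same sequence, while comparing it with `logistic_orbit(0.2 + 1e-10)` shows differences of order one within a few dozen iterations.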

2.2.3 Income generation and demand feedbacks — the Keynesian connection

The economy wide perspective is achieved through a public sector that collects taxes on all wages and capital income generated in the model, and generates public consumption (Eliasson, 1980c), and a Keynesian private demand function defined as a non-linear version of a Stone-type household expenditure system that feeds back into the markets of the model, or abroad as imports.7 The initial database is stock and flow consistent from the individual micro level up to the National Accounts (NA) level, and that consistency is maintained quarter by quarter throughout the simulation. The individual firm exports some of its production if it finds that profitable. Differences in domestic (endogenous) and world market (exogenous) prices regulate imports into the markets of the model. Monetary flows across borders are regulated by differences between the endogenous domestic interest rate and the exogenous foreign rate.
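For readers unfamiliar with the Stone-type system: Stone’s linear expenditure system allocates income $Y$ over goods as $e_i = p_i\gamma_i + \beta_i\,(Y - \sum_j p_j\gamma_j)$, with subsistence quantities $\gamma_i$ and marginal budget shares $\beta_i$ summing to one. The sketch below implements only this linear textbook form; the Reference model uses a non-linear version that is not reproduced here, and the example numbers are made up.

```python
def linear_expenditure(income, prices, subsistence, shares):
    """Stone's linear expenditure system (textbook linear form).

    e_i = p_i * gamma_i + beta_i * (income - sum_j p_j * gamma_j)
    """
    if abs(sum(shares) - 1.0) > 1e-9:
        raise ValueError("marginal budget shares must sum to one")
    committed = sum(p * g for p, g in zip(prices, subsistence))
    supernumerary = income - committed  # income left after subsistence
    return [p * g + b * supernumerary
            for p, g, b in zip(prices, subsistence, shares)]
```

With income 100, prices (1, 2), subsistence quantities (10, 5) and shares (0.6, 0.4), spending is (58, 42); because the shares sum to one, all income is spent, which is what lets household demand feed back consistently into the markets of a model (or abroad as imports).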

A typical feature of the Reference model is expectational overshooting, and longer term reversals (negative feedbacks) of strong cyclical booms and recessions occasioned, for instance, by an exogenous input of expectational, technological or policy origin. Examples are the simulated reactions of the Reference model economy to the oil price shocks of the 1970s, and to global inputs of technological advance (Albrecht, 1978; Eliasson, 1978a, 1979).

Such “growth cycles” are mathematically related to the mechanisms underlying the Le Chatelier principle in, for instance, hydrodynamic systems, stating that whenever a system in equilibrium is disturbed, the system will adjust itself in such a way that the effect of the change will be reduced or moderated. In the Reference model these adjustments are occasioned by the combination of Keynesian demand, and Stockholm School expectational feedback. Determining the parameters that regulate these feedbacks is the most challenging estimation problem of the Reference model. These time reaction parameters strongly impact the economy wide dynamic cost benefit calculations discussed in Section 6 below.

2.2.4 Technological change and economic growth — the Kaldor connection

Technology has been an upper bound on the growth of the model economy, to begin with in the form of an exogenously growing global pool of “best practice technologies” that firms individually tapped into endogenously through their investments. That pool of best practice technologies was studied empirically and historically by Carlsson (1981) and Carlsson et al. (1979) in a survey of the members of the Swedish Academy of Engineering Sciences. For many years the projected trends in those data were used as exogenous productivity inputs in new investment vintages, until Ballot & Taymaz (1998, 1999) endogenised firm innovative behaviour around the trend, using genetic network search algorithms fed by endogenous R&D investments. Firm innovation strategies could be designed to be incremental or radical8, to raise firm capacities to innovate (learning) and to discover new profitable technologies (innovation). This capacity of the firm to innovate relates to the Neo-Kaldorian branch of ABM inspired by Kaldor’s (1957) Harrod type Keynesian growth model.9 In this model technical change was related to the rate of capital accumulation and structural change (see for instance Lorentz & Savona, 2007; Cantner et al., 2009).
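Tapping an exogenous best-practice pool through investment is essentially a vintage capital structure. The hypothetical sketch below assumes one firm, a constant quarterly growth rate of the global frontier, constant investment and scrapping shares of capacity, and productivity fixed for each vintage once installed; the names `vintage_productivity`, `invest_share` and `scrap_share`, and their values, are illustrative only.

```python
def vintage_productivity(quarters, frontier0=1.0, frontier_growth=0.01,
                         capacity0=100.0, invest_share=0.05, scrap_share=0.03):
    """Capacity-weighted average productivity of one firm, quarter by quarter.

    Hypothetical vintage sketch: each quarter new capacity (invest_share of
    existing capacity) is installed at the current global best-practice
    productivity, and scrap_share of every existing vintage is retired.
    Returns (final frontier, list of quarterly average productivities).
    """
    frontier = frontier0
    vintages = [(capacity0, frontier0)]  # (capacity, productivity) pairs
    averages = []
    for _ in range(quarters):
        frontier *= 1.0 + frontier_growth          # exogenous global pool grows
        total = sum(cap for cap, _ in vintages)
        vintages = [(cap * (1.0 - scrap_share), prod) for cap, prod in vintages]
        vintages.append((invest_share * total, frontier))  # investment taps pool
        capacity = sum(cap for cap, _ in vintages)
        averages.append(sum(cap * prod for cap, prod in vintages) / capacity)
    return frontier, averages
```

Because every new vintage enters at the frontier while older vintages are retired only gradually, the firm’s average productivity rises every quarter but always lags best practice, and a higher investment share narrows the gap.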

The integration of the modules of the Reference model is pictured graphically in Figure 1, which largely speaks for itself. The figure shows the business firm module and how it interacts with all other firm modules in markets.10 The critical expectational feedback of individual firms between ex ante plans and ex post outcomes, characteristic of the Stockholm School, is pictured within the shaded rectangle to the upper right, and the Ballot & Taymaz (1998) Schumpeterian learning and innovation module, which replaced the original more primitive “Kaldorian” technology module, is indicated by arrows at the top left. The quarterly Keynesian demand feedback can be seen in the lower right hand corner. Double-lined rectangles identify the three markets of the model, and the three important exogenous inputs (foreign prices, the global interest rate and global best practice technology in each industry) are underlined in their respective boxes.

Most important for the evolution of the Reference model economy is that the state space, or “opportunities space” of the model is endogenously expanding as firms explore it and discover new opportunities, what I call the Särimner proposition11, thus ensuring that the interior of the opportunities space can never be fully researched, and that firms stay for ever ignorant of possible circumstances (in the model), that may force their demise, and that the Reference model will never collapse into a “full information” static neoclassical equilibrium.

2.2.5 Market self-coordination of ignorant agents — the notion of equilibrium revisited

The Reference model is economy wide, dynamic and agent-based. Coordination of agent behaviour is endogenous through market competition, and achieved without the help of a central planner or the fictitious Walrasian auctioneer. This is achieved despite agents being grossly ignorant of circumstances that might threaten their very existence, a theme pursued in Eliasson (2017b). Market self-coordination is costly (transactions costs), notably through business mistakes, and market clearing is never achieved. The model economy still evolves endogenously through time, and if environmental circumstances are right, will do so for ever. Such self-equilibrating models not only give rise to new estimation problems that you do not encounter in equilibrium models, but also require a different equilibrium concept than the conventional solution to an equation system.

This economy wide dynamics of the Reference model economy originates in the integration of the Schumpeterian/Austrian, Wicksellian/Stockholm School, Keynesian, and Kaldorian principles, intermediated through the three markets of the model (product, labour and financial, see Figure 1). This integration gives rise to the “Surprise Economics” examples discussed in Section 5, which also illustrate the risks of misunderstanding inherent in partial models. The point to be made is that you need all four familiar partial principles to generate such credible dynamics, and when the four are integrated in markets, they together create sufficient non-linear complexity in the form of endogenous structural change and agent selection (entry and exit) to eliminate any excuse for making the assumptions necessary to return the model onto the template of a stochastic general equilibrium (GE) model as stylised below. This unfortunately also makes the integrated model hard to estimate.12 Below the principal assumptions of a rational expectations neoclassical GE, or for that matter a dynamic stochastic general equilibrium (DSGE) model are listed (Eliasson, 1992, p. 256):

1. Behaviour: agents maximise expected utility, $\max E[U]$.
2. Expectations are formed from subjective probability distributions conditioned on “all available information” ($\Omega$), that is, historic realisations of all stochastic variables: $E[X] = P(X \mid \Omega)$.
3. Agents form (from points 1 and 2) ex post probability distributions that are identical to the subjective probability distributions under point 2: ex post $P(X) \equiv P(X \mid \Omega)$.
4. Ex post $P(X)$ are stationary.

§ 4 is the assumption needed for statistical (“econometric”) learning, something made clear already by Haavelmo (1944).13 A steady flow of observations from the realisation of the stationary P(X) process will eventually, and with the desired precision, allow unbiased estimates of the parameters of P(X). § 3 and 4 are stochastic equilibrium conditions. § 3 hides the rational expectations type equilibrium conditions of the neoclassical and DSGE models, which are violated in the Reference model. This is so since (among other things) Ω ex ante is practically always ≠ Ω ex post, because of all the Schumpeterian, Stockholm School, Keynesian and Kaldorian structure-changing dynamics going on at the micro market level, which make the population of firms endogenous. Hence, to be called dynamic, an economic model should be required to determine Ω endogenously and thus prevent ex post P(X) from being stationary (§ 4), even though E[X] may be stationary, which has been suggested and which is a perfectly reasonable assumption. Critical for this “anti-equilibrium” proposition are the Wicksellian/Stockholm School expectational feedbacks experienced by individual firms, a property that, together with the Särimner proposition, forms a well-defined partition between an evolutionary and a neoclassical model (Eliasson, 2017b).
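Why assumption § 4 matters for econometric learning can be illustrated numerically. The hypothetical sketch below keeps the mean of X constant at zero but lets its dispersion drift upwards, a crude stand-in for the endogenous change in Ω described above (in the Reference model such change is generated by the model itself, not imposed as a trend). With a stationary distribution, estimates from historical realisations recover the current parameters; with a drifting one, the historical variance estimate stays biased no matter how many observations are collected, while the mean is still estimated well.

```python
import random


def estimate_from_history(sigma_growth, n=20000, seed=1):
    """Estimate mean and variance of X from n historical realisations.

    X_t ~ Normal(0, sigma_t) with sigma_t = 1 + sigma_growth * t, so the
    mean is always zero but the distribution is stationary only when
    sigma_growth == 0. Returns (mean estimate, variance estimate,
    variance of the current, most recent distribution).
    """
    rng = random.Random(seed)
    sigmas = [1.0 + sigma_growth * t for t in range(n)]
    draws = [rng.gauss(0.0, s) for s in sigmas]
    mean_hat = sum(draws) / n
    var_hat = sum((x - mean_hat) ** 2 for x in draws) / (n - 1)
    return mean_hat, var_hat, sigmas[-1] ** 2
```

With `sigma_growth=0.0` the variance estimate lands close to the true value of 1; with `sigma_growth=1e-4` the current variance is about 9 while the estimate from history is only about 4.3.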

Selection of agents through entrepreneurial entry competition and endogenous exit, together with individual investments that bring new technology into firms, and self-regulation of the model economy through agents’ competition in markets, make the model initial state dependent, exhibiting phases of cumulative chaotic behaviour, and probably not ergodic, with the estimation problems that come with that property (Grazzini & Richiardi, 2015; Richiardi, 2013). Since the Reference model cannot be solved for an exogenous equilibrium trajectory or a final equilibrium end station, a cornerstone of standard neoclassical economics has been removed. It is interesting to note from Fontana (2006, 2010) that, as this gradually became apparent at the Santa Fe Institute, the early enthusiasm among some of its founding fathers for its research ambitions in complexity economics cooled. There is, however, no need for imposing an “assumed empirical” rational expectations equilibrium as Kydland & Prescott (1982, p. 1359) did in the “first” DSGE model. The economy wide dynamics of the Reference model are in fact normally well-behaved and self-regulated without any assumptional tricks, such as introducing a Walrasian central planner that does the coordination job free of charge (zero transactions cost assumption). But in order to discuss equilibrium without a Walrasian central planner, the concept of equilibrium has to be redefined, and the “GE feedback”, often lacking in partial models, will be very differently represented.

Any credible representation of an economic system has to possess some inherent equilibrating properties, but not necessarily an exogenous point or trajectory that the model can be solved for, and it is debatable whether a good economic model should be ergodic.14 A model of a self-regulated market based economic system has to be capable of dynamically operating without imploding under normal circumstances, and endogenously growing under some circumstances, without growth being propped up by an exogenous trend.

This endogenous self-regulation through supply and demand interaction in markets draws transactions costs. The most important transaction costs come in the form of “business mistakes” occasioned by discrepancies between ex ante plans and ex post outcomes at the agent level, that because of the non-linear complexity of the Reference model are unpredictable. Business mistakes so defined represent ex post outcomes that fail to be up to ex ante expectations and plans. Since entrepreneurial entry is both a growth-promoting and failure-prone activity, the rate of entrepreneurial entry (endogenous in the Reference model, but sensitive to market incentives that can be influenced by policy), and firm turnover have been demonstrated on the Reference model to be growth-promoting over the longer term up to a limit. Differences between ex ante plans and ex post outcomes are intermediated in financial markets, and therefore appear as a normal transactions cost for economic growth (Eliasson & Eliasson, 2005; Eliasson, Johansson, & Taymaz, 2005).

Clower (1967) made the lowering of transactions costs the rationale for the existence of money, as a special commodity that facilitated trade. Dahlman (1979) narrowed down the definition by specifying that the interesting transactions costs were those incurred through imperfect information, or (my point) differences between ex ante plans and ex post outcomes, that is mistaken resource allocations. This is both interesting and important in that transactions costs so delimited become unpredictable, and, as aptly noted by Dahlman (1979) both indeterminate and impossible to deal with by the traditional mathematical tools of the economist. The reason for making a point of this in the context of the Reference model is not only that such transactions costs are only tractable by mathematical simulation. They are also the important element of economic dynamics that should not be eliminated by simplifying assumptions.15 The historical and surprise economics applications of the Reference model in Sections 5 and 7 illustrate.

With his “Nirvana Fallacy” story, Demsetz (1969) had already delivered a devastating verdict on the GE model, showing that it is a logical fallacy to use the zero transactions cost world of GE theory as the reference for welfare analysis. Allowing transactions costs due to imperfect information, or for that matter ignorance (causing “business mistakes”), that are not proportional to the regular transactions costs of trading, etc., implies that the equilibrium not only becomes indeterminate but ceases to exist as an operating domain of the model. To study that non-linear dynamics, mathematical simulation becomes the analytical method of choice, and some measure of economic systems stability the relevant welfare concept (Eliasson, 1983, 1984, 1991a). An interesting policy exercise then becomes tuning the institutions of the model so that the time reaction parameters automatically keep the variables of the entire economy evolving along a reasonably fast growth path, but within local bands of variation that are acceptable from a welfare point of view, and not so fast as to force the economy into collapse-prone depressions every now and then. This would be a formidable simulation design to engineer, but a reasonable one, and far more reasonable than trying to place the model economy on a welfare maximising equilibrium path that does not exist.

As I will discuss below (Section 5), the self-regulatory properties of the Reference model offer excellent opportunities to study how such a welfare interesting “equilibrating” property could be meaningfully defined. From the point of view of the diffusion of economic doctrines in academia, it is interesting to observe that both Robert Clower and Harold Demsetz were colleagues at the University of California at Los Angeles (UCLA), and that Carl-Johan Dahlman obtained his doctorate there. Robert Clower furthermore participated in the first microsimulation conference in Stockholm in 1977, and from then on remained generally very sympathetic to the Reference model and its dynamic properties.

All economy-wide models have to be partial in some sense, but the Reference model, integrating several well-known but partial principles of economics, should be sufficiently general to minimize awkward exogenous misspecifications that would affect the four applications I have chosen to address. The Reference model floats in a global world that is largely imposed by exogenous assumption, even though Ballot & Taymaz (2012) introduced a global model composed of cloned Reference models that trade with each other through technology diffusion. The global environment of the original Reference model was assumed “in principle” to be in static equilibrium. The exogenous variables that impact on the Reference model have been set so that the establishment of one firm in exogenous best practice technology, fetching exogenous global market prices and operating at full capacity, will earn a nominal return on its establishment (“investment”) equal to the exogenous global interest rate. In simulations over historic time (for instance for calibration purposes) we however simply collect historical data on these variables, as we also did on best practice technologies (see above). In future simulations, we project (“forecast”) the exogenous variables imposing those equilibrium assumptions.16 Hopefully those “equilibrium” constraints can be loosened up in the future, for instance using the Ballot & Taymaz (2012) extension to construct an endogenous global technology frontier for domestic firms to tap into.

For the domestic economy, the solution is more straightforward. Simulations start on a historic initial year. The model is loaded with real firm data, including prices, wage levels, profits, etc. These factual distributions across firms on rates of return, productivities, growth rates, etc., for the initial quarters are then endogenously maintained throughout simulations, one important criterion for economic systems stability being that the diversity (heterogeneity) across firms of those distributions does not deteriorate over time (Eliasson, 1984).
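The stability criterion above, that cross-firm diversity should not deteriorate, can be turned into a simple check on simulation output. The sketch assumes that firm rates of return are available as one list per quarter, uses the population standard deviation as the dispersion measure, and allows a hypothetical tolerance of 25 per cent below the initial, data-loaded dispersion; the measure, the tolerance and the function names are illustrative choices, not those used in Eliasson (1984).

```python
from statistics import pstdev


def dispersion_path(returns_by_quarter):
    """Cross-firm dispersion (population std. dev.) of returns per quarter."""
    return [pstdev(quarter) for quarter in returns_by_quarter]


def heterogeneity_maintained(returns_by_quarter, tolerance=0.25):
    """True if dispersion never falls more than `tolerance` (a fraction)
    below its value in the initial, data-loaded quarter."""
    path = dispersion_path(returns_by_quarter)
    return all(d >= (1.0 - tolerance) * path[0] for d in path)
```

A run whose firm returns converge towards a common value, say from (0.05, 0.10, 0.15) to (0.09, 0.10, 0.11), fails the check: the model economy would then be drifting towards a homogeneous, and in this sense less stable, firm population.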

3. Terminology – many labels for similar apps

Microsimulation is an apt term for the ambitions I will be advocating, but the microsimulation field has unfortunately branched out in several, sometimes incompatible, directions, some of them voiced in the 1977 Stockholm conference.

The study of “micro-based dynamic economic systems theory and modelling” is my preferred general term. Simulation in the sense of this paper is a mathematical tool for the analysis of complex economic systems, in particular aimed at understanding the long-term evolution of entire economies. Microsimulation, for its part, has become a recognised term both for a method of analysis and for the study of a particular class of economic models. In the latter interpretation, microsimulation as commonly practised is a special branch of Micro to Macro economic systems modelling, the wider field of economic modelling that we envisioned at the time of the 1977 Stockholm conference. That branch starts from Orcutt’s concept that each micro agent conducts a stochastic experiment, with empirical applications dominated by the study of distributional consequences for the household sector, notably of tax changes. Market-intermediated interactions between those agents are rarely modelled, if at all. Few of the large number of such microsimulation studies have shown an interest in macro outcomes.

The second branch is represented by the Eliasson (1976b, 1977) micro- (firm) based self-coordinated economic systems model presented as the Reference model in the previous section, and Bergmann’s (1974) “transactions” model. Both have economy wide ambitions, as their distinguishing feature, and more importantly, they model the interaction (competition) of heterogeneous agents in explicit markets, and have a theoretical focus on the dynamics of the evolution of complex economic systems. Eliasson has often expressed his goal (for instance Eliasson, 2005b) as that of studying the economy wide consequences of “the birth, the life and the death of firms”, as they compete with each other in product markets, and for resources in labour and financial markets.

Firms are modelled to design business experiments to be tested in competition with other firms in markets, and to react to experiences from realised ex post outcomes, that diverge from ex ante plans, experiences that are fed back through markets to affect next period’s plans. Concerns about a realistic representation of firm behaviour are supported by references both to the Eliasson (1976a) study of business planning practices, as the prototype of their behaviour in the model, and to the specially designed survey to real Swedish firms to obtain a database for the model, including explicitly measured initial conditions for the quarter model simulations. The initial conditions measured are then endogenously updated from quarter to quarter.17 How aggregation of firms’ behaviour up to macro is intermediated in those markets is explicitly modelled.

The 1990s witnessed a third branch of Micro to Macro economic systems modelling: agent-based modelling (ABM). Insofar as the ambition has been to model entire economic systems, for instance in the Eurace model (Dawid et al., 2016), it comes close to the Bergmann (1974), Bergmann et al. (1980) and Eliasson (1976b, 1977) models. Both Ballot, Mandel, & Vignes (2015) and Richiardi (2013) classify the latter two models as the first economic ABMs, and they are right. The origin of ABM in general is, however, unclear, but seems to lie not in economics but rather in computer based studies of the behaviour of ant colonies (The Economist, 2013). Fontana (2006) draws the lineage back to von Neumann, cellular automata and the Santa Fe Institute, founded in 1984 to advance the understanding of complex multidisciplinary systems (Arthur, 1999), the interaction of heterogeneous micro agents in complex economic systems being one example, and very much what has been going on in the Reference model. When it touched down in economics in the 1990s, ABM was announced as a “new separate” branch of economics. The question therefore is whether that literature offers more than a new name for Micro to Macro economic systems modelling published decades earlier.

Subsequently, Kydland and Prescott (1982) type DSGE models sailed up as a fad in academic economics, central banks being early enthusiastic users, but then declined in popularity after the crisis of 2008, observes The Economist (2016, p. 65). These models have been presented as both dynamic and micro-based macro, compared to CGE sector models. Compared to the micro firm or household based macro systems models just discussed, this is going a bit far, even if heralded as something radically new by many economists (for instance Fernandez-Villaverde, 2010). There are no explicit heterogeneous agents and no explicit markets (only an assumed zero transactions costs rational expectations equilibrium), and no dynamics; all markets are in stochastic market-clearing equilibrium, and when it comes to the endogenous growth cycle claimed to be there, Kydland & Prescott (1982) have plugged in an exogenous trend.18

So this family of economic macro models has little to do with either economic dynamics or ABM. Perhaps there is no economics at all in the DSGE model, because assumptions have been substituted for explanation. Day (1996, p. 399) therefore calls such models “statistical characterisations of their trajectories”.19 “The great recession poses a serious challenge to mainstream macro” (read DSGE models), writes Richiardi (2017). The failure of DSGE models in central banks to predict, and to support an understanding of, the financial crisis of 2008 seems to have caused interest in such models to cool (Goodhart, Romanidis, Tsomocos, & Shubik, 2016).20

Since both the DSGE and the CGE, as well as the K&L models can be derived as special cases of the Reference model (Eliasson 2017a, p. 362), or similarly structured agent-based models, I see no point in making this a special branch of micro-based economic systems modelling.

There is also a fourth branch of Micro to Macro economic systems models with the specific ambition of clarifying the microeconomic foundations of existing neoclassical and Keynesian macroeconomic models, taking the latter as given. That was also one ambition voiced at the 1977 Stockholm Microsimulation Conference. In the light of this paper, however, this ambition becomes futile. With Reference type models properly established and estimated there will be no need for a separate macro theory. But some of these studies (for instance Fisher, 1972, 1983; Weintraub, 1979, 1983) are interesting for other reasons, because they clarify indirectly, and sometimes in direct words, the shaky foundations of any macro model. Fisher’s (1972) treatise on “price adjustment without an auctioneer” is interesting, not only because it clarifies that, but also because the profession had not by 1972, and still has not, taken economics beyond the central planner and auctioneer of Walras who coordinates the economy free of charge.21 Weintraub (1983) does not mince his words when he concludes: could it be that the Walrasian equilibrium story has exercised such strong influence on a narrow minded profession that “empirical work, ideas and facts and falsification” have been allowed to play “no role at all”?

I will therefore stay with “Micro to Macro economic systems modelling” as the general term that covers microsimulation, ABM and the narrow task of clarifying the micro foundations of existing macroeconomic models as special cases. The distinction that should be made is that micro-based economic systems modelling concerns the evolution of an entire economy, featuring deterministic behaviour of heterogeneous agents interacting in markets, and incompatible ex ante agent plans that are sorted out by competition and self-coordinate the economic system (without, however, clearing the markets), such that prices and quantities are determined and realised behaviour is fed back sequentially over time. Each agent thus faces the actions of all other agents as a critical part of its economic environment.

Rapid advances in computer capacity and improvements in simulation techniques have gradually made complex models of the Reference model type tractable for mathematical simulation analysis. Only recently, however, have computer capacity and databases sufficient for empirically credible estimation of such models begun to become available. This allows modelling to move beyond the low ambition of generating “stylised facts”, by way of the very early, and for its time advanced, calibration model of Taymaz (1991b), developed for the Reference model, towards proper parameter estimation, which has not yet been achieved. The latter should be a priority concern, to confer on these models the empirical credibility they deserve (Eliasson, 2016; Hansen & Heckman, 1996; Richiardi, 2013). The compilation of high-quality databases, based on taxonomies tailored to the problems addressed, is probably the more difficult of the two obstacles to progress. Ultimately, however, there should be little use for a separate branch of economics called macroeconomics.

4. What are these complex, data demanding and difficult to estimate agent-based economic systems models good for?

The question “What are these complex and difficult to estimate models good for?” is instead a fundamental concern, and it can only be answered with another question: “What are you interested in?” There are a number of questions for which a meaningful answer requires the analysis to be based on an economy wide, dynamic and difficult to estimate agent-based model. However, handling them all within the same general model structure would be a hopeless undertaking. Economics is too large a field for it to be meaningful to aim for a universal theoretical construct that holds intelligible answers to all important socioeconomic questions, not to mention all the psychological, sociological and historical dimensions that impinge on the economic system, and that are most often neglected altogether by economists. Economic models will therefore always be purpose dependent, and require that researchers present a convincing empirical argument for the prior, and thus always partial, model choice they make. This does not exclude that a more general and perhaps verbal “theory” can be used to facilitate that choice, and to help interpret the results achieved on the basis of a partial model. In fact, that prior choice of an empirically credible partial model for quantitative analysis is the most important part of the analysis. It defines the “art of economic analysis” (Eliasson, 1992, 1996, 2005a, 2017b).

4.1 Good micro-based economy wide systems models make macroeconomics superfluous

To put it bluntly: Macroeconomic theory or models can never explain macroeconomic evolution, only describe it, at best (Eliasson, 2003). For an explanation you have to go down to the micro level of behaving economic decision units, explicitly model how their behaviour in markets and hierarchies moves the evolution of the entire model economy, and then sum up (“aggregate”) the final outcomes (Eliasson, 2017b). Macro output so defined and empirically represented will then be a measure of something you want to observe, for instance a welfare index, or a quantification of the resources available in an economy. When the micro foundations of heterogeneous, ignorant and behaving agents have been properly modelled and the final outputs summed up, there is no need for a separate macro theory. A separate macro theory may be needed if the micro foundations have been poorly understood and erroneously represented, which Citro & Hanushek (1997) argued (from their experience with household data) would long be the case. While their conclusion was to go for improved data collection rather than to build less data demanding models that were not well grounded empirically, I would add that good models can be built even though we do not yet have all the data needed. Such models may help specify the data that need to be collected.22 Furthermore, and as a corollary, the interesting welfare concept to study will be the stability and predictability of the economic environments of micro agents, not maximum output per capita or the like, a concept that can be neither defined nor determined in a well formulated micro (agent) based evolutionary economic systems model of the kind I am using as Reference model (Eliasson, 1983, 2015, 2017a).

Feldstein (1982, p. 829) insisted in a much-cited article that “in practice all econometric specifications are necessarily “false” models”. There is therefore no hope of finding the truth through them. “The applied econometrician, like the theorist”, Feldstein continued, “soon discovers from experience that a useful model is not one that is “true” or “realistic”, but one that is parsimonious, plausible, and informative”. The question, however, remains what is meant by informative, and in particular whether parsimonious means wrongly specified.

Quite often a model is said “to work”. That proposition is often associated with Friedman (1953), who argued that as long as a model predicts well, its credibility should not be put in doubt, however “false” its specification. But what does it mean for a forecaster (or a decision maker who uses the forecast) to keep the model as long as “it works”, and then reject it when it stops working, if the incident that caused the rejection is exactly what the decision maker wanted to be forewarned about? The Reference model is a particularly apt tool for responding to such questions. It exhibits long periods of seemingly steady state macro growth, during which standard, parsimonious macro models would predict growth well, only to break abruptly into “Surprise Behaviour” (Section 5) originating in non-linear market selection dynamics that no macro model would be capable of reproducing or forecasting. That is the kind of phenomenon a user of forecasts would like to be forewarned about, and that a model would have to anticipate to warrant the term “to work”.

Economy wide, dynamic and agent based economic systems models will normally be complex. Modelling the economic systems consequences of “the birth, the life and the death of firms” (Eliasson, 2005b), explicitly representing the interaction of competing heterogeneous agents across markets and over time, moved by innovative entry (“entrepreneurial competition”) and forced exit (“selection”), as in the Reference model, always entails difficult, non-transparent complexity. For the life of business entities to be credibly represented, “economic mistakes”, in the form of deviations between ex ante plans and ex post outcomes, have to be explicitly modelled as normal transaction costs (the Stockholm School connection). To warrant the term dynamic, period-to-period feedback from ex ante plans to ex post outcomes has to be explicitly accounted for, and since such feedback may be large, its impact on next-period investment and production structures also has to be explicitly represented. Growth moved by entrepreneurial competition and market-based selection (firm turnover) means irreversible systems evolution and endogenously changing relative prices. Such models are non-linear and initial state dependent, exhibit phases of chaotic behaviour, and suffer from all the ensuing problems of parameter estimation and empirical credibility. If you happen to be interested in the above dynamics, you also have to brace yourself for the consequent model complexity.
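To make the ingredients listed above concrete, here is a minimal Python sketch of a toy sequential market. It is an illustration under stated assumptions, not the Reference model's specification: the names `Firm` and `simulate`, the adaptive expectation rule, the partial price adjustment and every parameter value are hypothetical. Heterogeneous firms produce according to ex ante plans; the price moves only part of the way toward the level that would absorb a fixed nominal demand (so the market is not cleared within the period); the gap between planned and realised revenue is recorded as an “economic mistake”; realised returns feed back into next-period capital and expectations; firms whose capital shrinks below a threshold exit, and newcomers occasionally enter.

```python
import random
from dataclasses import dataclass


@dataclass
class Firm:
    productivity: float    # output per unit of capital; differs across firms
    capital: float         # installed capital
    expected_price: float  # ex ante price expectation behind the firm's plan


def simulate(periods=60, n_firms=20, demand=30.0, unit_cost=1.0,
             price_speed=0.5, adapt=0.5, invest=0.5, min_capital=0.2,
             entry_prob=0.3, seed=1):
    """Toy sequential market with entry, exit and ex ante/ex post gaps.

    Every parameter is a hypothetical illustration, not an estimate.
    """
    rng = random.Random(seed)
    firms = [Firm(rng.uniform(0.8, 1.6), 1.0, 1.0) for _ in range(n_firms)]
    price = 1.0
    history = []
    for _ in range(periods):
        # Innovative entry: occasionally a newcomer with a random productivity
        # draw enters (and one always enters if the population has died out).
        if not firms or rng.random() < entry_prob:
            firms.append(Firm(rng.uniform(0.8, 2.0), 0.5, price))
        # Ex ante plans: each firm produces capital x productivity.
        supply = sum(f.productivity * f.capital for f in firms)
        # The price moves only part of the way toward demand / supply, so the
        # market is not cleared within the period.
        price += price_speed * (demand / supply - price)
        mistakes = []
        for f in firms:
            output = f.productivity * f.capital
            # "Economic mistake": planned minus realised revenue, in absolute value.
            mistakes.append(abs(f.expected_price - price) * output)
            # Ex post return per unit of capital feeds back into investment...
            realized_return = price * f.productivity - unit_cost
            f.capital *= max(0.0, 1.0 + invest * realized_return)
            # ...and into next period's expectation (adaptive, an assumption).
            f.expected_price += adapt * (price - f.expected_price)
        # Selection: firms whose capital has shrunk below the threshold exit.
        firms = [f for f in firms if f.capital >= min_capital]
        history.append({"price": price, "supply": supply, "firms": len(firms),
                        "mean_mistake": sum(mistakes) / len(mistakes)})
    return history
```

One might inspect the returned `history` for the number of surviving firms and the mean mistake per period; changing `seed` changes which firms are drawn, enter and exit, and therefore typically the whole trajectory, which is a crude stand-in for the initial state dependence discussed in the text.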

4.2 Minimum requirements of specification

Each question asked requires a minimum of empirical properties to characterise the mathematical model for it to be a credible instrument of analysis, and the four problems chosen for illustration in Sections 5, 6, 7 and 8 are particularly demanding in that respect. The minimum features required depend not only on the problem you want clarified. They also relate to the integration of competing, heterogeneous but ignorant market agents; to sequential period-to-period market feedback of the agents’ previous experience; and, because of the consequent complexity of market dynamics, to endogenous structural and relative price change, which causes systematic differences between ex ante agent plans and ex post outcomes (“economic mistakes”). This is a characteristic of the Reference model that is suspiciously absent from most econometric models. Such models are non-linear and initial state dependent, and feature endogenous populations of agents and, because of selection, irreversible economic systems trajectories, in which small events, minor at the time, may cumulate over time into major (bad or good) occurrences. These are model features that have been removed by assumption from post-war neoclassical economics, in my view to facilitate simple, but unfortunately “false”, mathematical representation. These empirically relevant characteristics also unavoidably turn economic theory into a genuine empirical science, which is good. So what are the great benefits of all this added complexity and difficulty of both analysis and parameter estimation that make such models worth the effort to build and install?

Today we have better tools than were available when those concerns were formulated. Mathematical simulation of Micro to Macro economic systems models does not restrict economic theorising to narrow, partial, static and linear models that are false in most contexts. High dimensional models rich in empirical content can be explored for surprise theoretical conjectures (see Section 5). The dynamics of complex interactions of agents in markets featuring sequential feedback of previous experience and selection can be studied, and the consequences of endogenous changes in the population of agents accounted for. The role of initial conditions (“history”) for future systems evolution can be studied (Section 7). An acute problem for decision makers, in the business community in particular but frequently also for the policy maker, is lack of data on the current situation. Fragmented case information may exist, and the decision maker needs to form a coherent view of the economy wide dynamic implications of that information. Normally s/he has done so by combining whatever personal experience there is with guesses. Generalising from case studies using a complex agent-based device like the Reference model is a systematic method of doing that, which could in principle become a new field in economics (Section 8), perhaps even providing guidance in acute decision situations, but more importantly as a way of practising a systematic, holistic approach to decision making.

The flip side of the added complexity and of the heavier demands on theoretical and analytical capabilities is mounting estimation difficulty, and the problem that the model will be compatible with “any given” set of facts (Gabaix & Laibson, 2008). Some may say that this is a practical problem only. Feldstein’s (1982) argument was that, because of the “complexity of economic problems, and the inadequacies of economic data”, all econometric models were necessarily false, and could not be explored for some “truth” hidden in them. Lacking a true model, one could instead learn from “the use of several “alternative” false models” by combining knowledge, theoretical insights and judgement (op. cit. p. 283). Combining several partial, and therefore individually false, models could produce a better, and less false, understanding of a policy situation. But this “combination” has to be done right. While some practitioners in the economic analysis field may command the necessary intuitive faculties, there is no way to tell, only to believe. Models of the Reference type, on the other hand, have been designed for a systematic combination or integration of case information, which should be a great merit.

Theoretical physicists have tried for decades to merge the four partial theories dealing with the strong, the weak and the electromagnetic forces and with gravity, hoping to arrive at a unified model of improved understanding. With modern simulation mathematics at our disposal, the scientists do not have to hesitate because of mounting methodological complexity. The empirical credibility of such exercises of course depends on the credibility of the prior model specification and the parameter estimates. But the simulated conjectures might still suggest where to look for empirical verification, which is very much what is being done in the physical sciences. In the case of the Reference model, to make my point, this is far less costly than building the big colliders to look for the exotic “sparticles” suggested to exist by even more abstract models in theoretical physics.

The large number of parameters and variables in Micro based Macro economic systems models, such as the Reference model, means that large numbers of conjectures can be generated, studied and subjected to empirical judgement. And even if you as an analyst may not be ready to pick one conjectured scenario over another, you may study the originating circumstances of a number of bad outcomes, and warn the political decision maker you serve how s/he might guide the economy around them (see Section 7). Nor do you have to disappear into empty abstractions. The Reference model of Section 2 in fact integrates the partial conclusions of Schumpeterian, Keynesian and Kaldorian economics with the more abstract thinking of the Stockholm School economists into a mathematical structure that includes an eleven-sector computable general equilibrium model, or an L&K model, as special cases (Eliasson, 2017a, 2017b). That is in contrast to so-called pure mathematical economics, the models of which are constrained so as to make it possible to identify the conclusions that can be drawn from a minimum of trivially true axioms. Disregarding the question of how economically interesting such analyses are, and can be demonstrated to be, this is not meaningful in the high dimensional model world we are discussing. A minimum of empirically credible restrictions on the parameters of the models has to be ascertained for the analytical results to be economically interesting. So the credibility problems simply have to be overcome through good measurement, new data, computer capacity and innovative advances in inference theory.
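The idea of simulating many conjectures and then studying the originating circumstances of the bad outcomes can be sketched as a small Python harness. This is an assumption about how such screening might be organised, not a procedure documented for the Reference model: `screen_scenarios`, the stand-in `toy_economy` and its `growth` and `concentration` parameters are all hypothetical. The harness draws parameters, runs the model, classifies each outcome as bad or not, and compares average parameter values between the two groups.

```python
import random
import statistics
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]


def screen_scenarios(model: Callable[[Params, random.Random], float],
                     sample_params: Callable[[random.Random], Params],
                     is_bad: Callable[[float], bool],
                     n_runs: int = 500,
                     seed: int = 0) -> Tuple[int, Dict[str, Tuple[float, float]]]:
    """Run many conjectured scenarios and compare the conditions behind bad
    outcomes with those behind the rest.

    Returns the number of bad runs and, per parameter, the pair
    (mean over bad runs, mean over other runs).
    """
    rng = random.Random(seed)
    bad: List[Params] = []
    other: List[Params] = []
    for _ in range(n_runs):
        params = sample_params(rng)
        outcome = model(params, rng)
        (bad if is_bad(outcome) else other).append(params)
    report: Dict[str, Tuple[float, float]] = {}
    if bad and other:
        for key in bad[0]:
            report[key] = (statistics.mean([p[key] for p in bad]),
                           statistics.mean([p[key] for p in other]))
    return len(bad), report


# Hypothetical stand-in for a full simulation model: output grows steadily but
# is hit by random contractions whose frequency rises with "concentration".
def toy_economy(params: Params, rng: random.Random, periods: int = 40) -> float:
    output = 1.0
    for _ in range(periods):
        output *= 1.0 + params["growth"]
        if rng.random() < 0.1 * params["concentration"]:
            output *= 0.7
    return output


def sample_params(rng: random.Random) -> Params:
    return {"growth": rng.uniform(0.01, 0.03),
            "concentration": rng.uniform(0.0, 1.0)}


if __name__ == "__main__":
    n_bad, report = screen_scenarios(toy_economy, sample_params,
                                     is_bad=lambda y: y < 1.0)
    print(n_bad, report)
```

In a real application the stand-in would be replaced by the simulation model itself, and the crude comparison of means by whatever diagnostic the analyst finds credible; the harness only organises the runs so that the circumstances behind bad outcomes can be examined systematically.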

I will address this complex problem in terms of four very different theoretical and empirical problem areas, and ask what is minimally required of an economic model for it to respond meaningfully to the relevant research questions. As it happens, I will be able to refer to a Reference model that exists, is in principle capable of handling all those problems, and has been used on several of them, as the references will show. The mathematical simulation analyses conducted with it include the derivation of economy wide, dynamic economic systems evolution based on explicitly modelled micro market behaviour, for instance the emergence, or non-emergence, of a “New Economy” based on new computing and communications technologies. The following four fields of application will be addressed in turn: